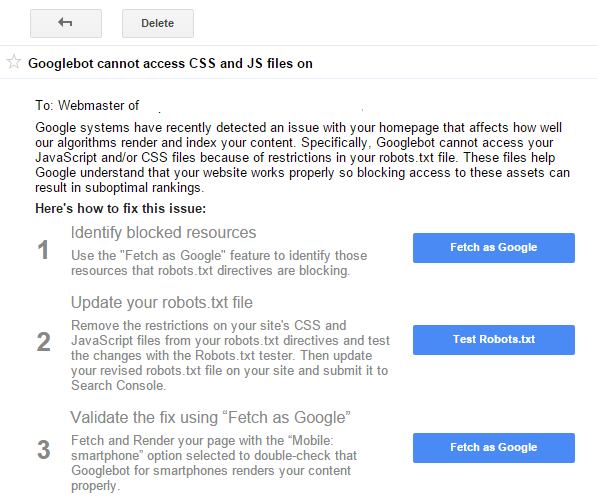

Late last year, Google wrote that blocking CSS and JavaScript through your site's robots.txt file could result in reduced indexation and rankings. Specifically:

To help Google fully understand your site’s contents, allow all of your site’s assets, such as CSS and JavaScript files, to be crawled. The Google indexing system renders webpages using the HTML of a page as well as its assets such as images, CSS, and Javascript files. To see the page assets that Googlebot cannot crawl and to debug directives in your robots.txt file, use the Fetch as Google and the robots.txt Tester tools in Search Console.

Fast forward to today, and messages stating exactly that are starting to appear in Google Search Console.
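If you want a rough local check before opening Search Console, the sketch below uses Python's standard-library `urllib.robotparser` to list which asset URLs a robots.txt file would block for Googlebot. The robots.txt contents, the `example.com` URLs, and the `blocked_assets` helper are all hypothetical and only for illustration. Python's parser does simple prefix matching and does not implement Google's wildcard handling, so treat the result as a first pass rather than a substitute for the robots.txt Tester.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks asset directories (illustration only).
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/css/
Disallow: /assets/js/
"""

# Hypothetical asset URLs that a page on the site might reference.
ASSET_URLS = [
    "https://www.example.com/assets/css/site.css",
    "https://www.example.com/assets/js/app.js",
    "https://www.example.com/images/logo.png",
]


def blocked_assets(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_assets(ROBOTS_TXT, ASSET_URLS):
        print("Blocked for Googlebot:", url)
```

With this sample file, the CSS and JavaScript URLs are reported as blocked and the image is not. Removing the two `Disallow` lines, or narrowing them so they no longer cover asset directories, would clear the list.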

You should review Search Console frequently to check your site's overall health and capitalise on the messages that Google sends. At least they're telling you! Read our recent blog post, the Google Console Cheat Sheet.

Of course, if you need any assistance with this, feel free to reach out.